The reign of diverse applications is here! Web, desktop, and mobile applications are now being leveraged by business functions across the organization, from sales, marketing, and customer service to finance, human resources, and more. These applications share a single goal: to provide a stunning user experience. To achieve this, organizations:

- Extensively research the latest technologies

- Choose the most suitable platforms, such as Azure, AWS, or Google Cloud, to host their applications

- Integrate third-party and SaaS-based applications for a seamless UX

While they may provide a seamless user experience, most organizations fail to derive and harness critical insights from their applications once launched. These insights play a key role in both enhancing future application performance and fulfilling strategic business goals.

A 2019 Gartner report suggests, “Through 2022, only 20% of analytic insights will deliver business outcomes”. This points to the low maturity and success rate of data analytics projects. What is the cause of this failure? People and processes. Often, organizations lack the skills or processes to effectively harness the vast amount of data their applications create and turn it into meaningful insights.

Organizations that leverage data effectively can quickly mitigate their process-, people-, and tool-related challenges. Here’s where DataDevOps, also called DataOps, can help.

What is DataOps?

DataOps is a common technical platform into which you can plug data and tools from diverse business processes for centralized processing and cross-functional insights. The orchestration of this entire process, including ingestion and enrichment, is executed within a data factory. This provides the basis for data discovery, analytics, and insights as required by the respective business stakeholders.

In this blog, we will guide you through the steps for DataOps implementation and provide a case study that showcases how you can utilize Azure/AWS to achieve your DataOps objectives.

The Value of DataOps

DataOps enables you to analyze and derive insights from data that is generated by every business process and activity in an organization, something that isolated data stores are unable to do.

A DataOps implementation answers these technical questions across business processes:

- How can we reduce manual work?

- How do we develop new features without impacting the current development?

- Are non-production and production environments segregated?

- How can we continuously collaborate and sync with each other for new feature development?

- Do we need controlled deployment or governance on important environments (e.g. Production/UAT)?

- How can we manually check, identify, and resolve any failures in data pipelines?

- Which technology would be best for building and verifying pipelines?

A successful DataOps implementation can provide numerous benefits:

- Identify hidden patterns and KPIs from business processes to improve functionalities and services and generate insights

- Collate data from disparate but interdependent business functions

- Study the data to find the patterns/insights that these data sets could provide

- Automate the insight generation process

- Automate other manual processes like environment provisioning, code and configuration management, infrastructure monitoring, application monitoring, etc.

- Improve error tracking and reporting

Preparing for DataOps: Conducting Data Research

Data research refers to studying business processes and process-generated data, and identifying the KPIs and insights that these data sets could provide together. If you face challenges related to data research, try answering the following questions:

- What kind of data is generated in each process and how are the processes interconnected?

- Is there common data between two datasets that can link them together?

- Is the grain of data uniform across interdependent processes?

- Do we need to roll-up/roll-down data to use it effectively?

- You might observe that joining two datasets on identified common columns does not generate any data. What could be the common issues?

  - There might be two different words being used to capture the same information. Check for synonyms and account for them.

  - There could be other contextual differences, like using spaces and hyphens interchangeably, using different spellings, etc.
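The join problems described above can often be handled by normalizing the key column on both sides before joining. Here is a minimal sketch using only the Python standard library. The `SYNONYMS` map, the column names, and the sample rows are hypothetical and only illustrate the idea; a real project would derive them from the data research itself.

```python
import re

# Hypothetical synonym map: different words recorded for the same value.
SYNONYMS = {"hr": "human resources", "cust service": "customer service"}


def normalize_key(value: str) -> str:
    """Lower-case, treat hyphens/underscores as spaces, collapse whitespace, map synonyms."""
    cleaned = re.sub(r"[-_\s]+", " ", value.strip().lower())
    return SYNONYMS.get(cleaned, cleaned)


def join_on_key(left, right, key):
    """Inner-join two lists of dicts on a normalized key column."""
    index = {}
    for row in right:
        index.setdefault(normalize_key(row[key]), []).append(row)
    joined = []
    for row in left:
        for match in index.get(normalize_key(row[key]), []):
            # On a name clash, the value from the left row wins.
            joined.append({**match, **row})
    return joined


# Made-up sample data: a plain equality join on "department" would match nothing.
tickets = [{"department": "Customer-Service", "open_tickets": 42},
           {"department": "HR", "open_tickets": 7}]
headcount = [{"department": "customer service", "staff": 12},
             {"department": "Human  Resources", "staff": 5}]

rows = join_on_key(tickets, headcount, "department")
```

With the keys normalized, both departments match even though the raw values differ in case, hyphenation, spacing, and wording.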

Implementing DataOps: Ingesting Data from Source Systems to a Single Datastore

The first and most important task is to bring data from disparate systems into one common datastore and then standardize the data format across as many systems as possible.

Some of the common questions you might need to answer during this stage are as follows:

- Is the data structured, semi-structured, or unstructured?

- What would be the ideal storage tool and storage format for the data?

- Are there tools to ingest this data into the target system in a reliable way?

- What is the frequency of data refresh?

- Will the data be pulled in full each time or refreshed incrementally?

- Is the data protected? Do any data columns need to be masked?
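Two of the questions above, incremental refresh and column masking, can be illustrated with a short sketch. It assumes each source row carries an `updated_at` timestamp that can serve as a watermark, and it masks a hypothetical `email` column with a truncated SHA-256 digest. Both choices are assumptions made for illustration; a plain unsalted hash may not satisfy your data-protection requirements.

```python
import hashlib
from datetime import datetime

MASKED_COLUMNS = {"email"}  # hypothetical set of sensitive columns


def mask(value: str) -> str:
    """Replace a sensitive value with a truncated one-way SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def ingest_incremental(source_rows, last_watermark):
    """Return rows newer than the watermark (sensitive columns masked) and the new watermark."""
    new_rows = [r for r in source_rows if r["updated_at"] > last_watermark]
    masked = [
        {k: mask(v) if k in MASKED_COLUMNS else v for k, v in row.items()}
        for row in new_rows
    ]
    # If nothing new arrived, the watermark stays where it was.
    new_watermark = max((r["updated_at"] for r in new_rows), default=last_watermark)
    return masked, new_watermark


# Made-up source rows for illustration.
source = [
    {"id": 1, "email": "a@example.com", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "email": "b@example.com", "updated_at": datetime(2024, 1, 3)},
]
loaded, watermark = ingest_incremental(source, datetime(2024, 1, 2))
```

Storing the returned watermark and passing it to the next run means only rows changed since the previous run are pulled again.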

Implementing DataOps: Generating and Reporting Insights

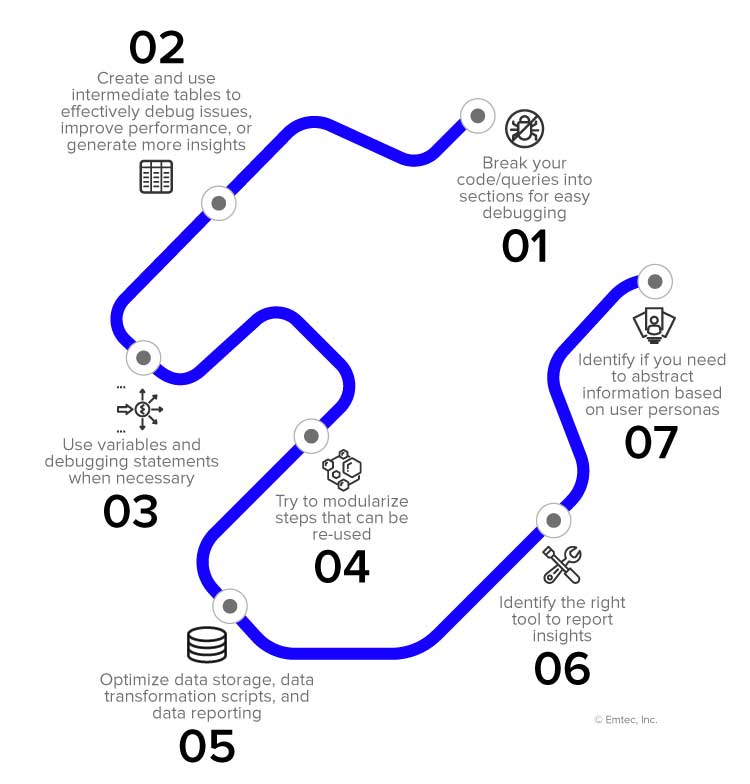

The next step is to use the ingested data to generate insights. This requires creating script(s) that transform the ingested data into usable insights/KPIs and creating reports that present these insights to the audience. Some of the best practices for this step are:

Implementing DataOps: Automating Insight Generation Process

This step comprises automating data ingestion and the transformation of that data into insights. This automation is generally called creating “data pipelines”. These data pipelines can be scheduled to run automatically or can be configured to execute based on specific triggers (when a previous pipeline finishes, when data is available, or when it is manually executed). Based on the system in use and the type of data, a vast variety of tools and options are available to achieve this. Further, each tool can have multiple ways of creating these pipelines. Here are the steps to follow when creating data pipelines:

- Ensure that the pipelines generate abundant logs and send out alerts on failures

- Check that input data is available and is in the expected format

- Check that the output data is generated and is available to downstream systems

- Ensure that any data, even temporary data is cleaned up
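The four steps above can be sketched as a single pipeline run. This is a minimal, tool-agnostic illustration using only the Python standard library, not the way any particular data factory implements it. The CSV layout, the `EXPECTED_COLUMNS` schema, and the `send_alert` hook are all assumptions made for the example.

```python
import csv
import logging
import shutil
import tempfile
from pathlib import Path

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

EXPECTED_COLUMNS = ["region", "amount"]  # hypothetical input schema


def send_alert(message: str) -> None:
    """Placeholder alert hook; a real pipeline might email or page someone."""
    log.error("ALERT: %s", message)


def run_pipeline(input_path: Path, output_path: Path) -> bool:
    """Total the amount per region; return True on success, False on failure."""
    tmp_dir = Path(tempfile.mkdtemp(prefix="pipeline_"))
    try:
        # Check that the input is available and in the expected format.
        log.info("Checking input %s", input_path)
        if not input_path.exists():
            raise FileNotFoundError(f"input not available: {input_path}")
        with input_path.open(newline="") as f:
            reader = csv.DictReader(f)
            if reader.fieldnames != EXPECTED_COLUMNS:
                raise ValueError(f"unexpected columns: {reader.fieldnames}")
            totals = {}
            for row in reader:
                totals[row["region"]] = totals.get(row["region"], 0.0) + float(row["amount"])

        # Write to a temporary file first, then publish for downstream systems.
        staged = tmp_dir / "staged.csv"
        with staged.open("w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(["region", "total_amount"])
            writer.writerows(sorted(totals.items()))
        shutil.copy(staged, output_path)

        # Check that the output was generated.
        if not output_path.exists() or output_path.stat().st_size == 0:
            raise RuntimeError("output was not generated")
        log.info("Wrote %d regions to %s", len(totals), output_path)
        return True
    except Exception as exc:
        send_alert(f"pipeline failed: {exc}")
        return False
    finally:
        # Clean up temporary data whether the run succeeded or failed.
        shutil.rmtree(tmp_dir, ignore_errors=True)
        log.info("Removed temporary directory %s", tmp_dir)
```

A scheduler or trigger would call `run_pipeline(Path("sales.csv"), Path("sales_by_region.csv"))` (file names are placeholders) and use the boolean result to decide whether downstream pipelines should start.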

Implementing DataOps: Automating other manual tasks

Once insight generation is automated, the next step is to free other maintenance processes (such as managing code and infrastructure) from manual intervention. This also helps reduce the human overhead of deploying new versions of the application and pipelines. Some of the areas where this helps are:

- Code versioning

Git is a very popular tool to store and version code. Several Git hosting platforms are available, such as GitLab. Git is supported by most IDEs and enables multiple people to work together without affecting each other’s work or hampering releases. It also provides an effective management tool for project owners to track and review their team’s work.

- Environment provisioning

Most tools/services (AWS, Azure, etc.) allow the automation of infrastructure provisioning and configuration. This helps not only in the initial setup but also during infrastructure updates and disaster recovery processes. Some of the steps that need to be followed are:

- Identify available tools to automate provisioning and choose the right tool

- Identify all required environments

- Separate the configurations used in each environment

- Create scripts to automatically provision infrastructure for each environment
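As a rough illustration of separating configuration per environment, the sketch below keeps every environment-specific value in one place and derives the provisioning steps from it. The environment names, URLs, and step descriptions are hypothetical, and the function only builds a plan. It makes no calls to AWS, Azure, or any other provider.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EnvironmentConfig:
    name: str
    datastore_url: str
    worker_count: int
    requires_approval: bool  # controlled deployment for important environments


# Hypothetical values; real settings would live in version-controlled config files.
ENVIRONMENTS = {
    "dev": EnvironmentConfig("dev", "postgres://dev-db.example.internal/analytics", 1, False),
    "uat": EnvironmentConfig("uat", "postgres://uat-db.example.internal/analytics", 2, True),
    "prod": EnvironmentConfig("prod", "postgres://prod-db.example.internal/analytics", 4, True),
}


def provisioning_plan(env_name: str) -> List[str]:
    """Build the ordered provisioning steps for one environment (no cloud calls are made)."""
    try:
        cfg = ENVIRONMENTS[env_name]
    except KeyError:
        raise ValueError(f"unknown environment: {env_name}") from None
    steps = []
    if cfg.requires_approval:
        steps.append(f"wait for manual approval before changing {cfg.name}")
    steps.append(f"create datastore at {cfg.datastore_url}")
    steps.append(f"start {cfg.worker_count} pipeline worker(s)")
    return steps
```

Because the same function serves every environment, adding a new environment means adding one configuration entry rather than a new script.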

- Code deployment

Scripts and tools (like Jenkins) can be used to deploy an application to its provisioned infrastructure. A deployment typically covers the following steps:

- Download/install software, dependencies, etc.

- Deploy code and configurations

- Start any required processes/code

- Testing

Writing automated test cases is almost mandatory now. These tests can be executed right after the code is deployed and can be used for a variety of purposes, such as:

- Stress testing

- Functional testing

- Schema validation

- Capturing code coverage

- Validating code quality
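Of the test types listed above, schema validation is the easiest to show in a few lines. The sketch below uses Python's built-in `unittest` module. The `EXPECTED_SCHEMA` and the sample rows are hypothetical stand-ins for the output of a real pipeline.

```python
import unittest

EXPECTED_SCHEMA = {"region": str, "total_amount": float}  # hypothetical output schema


def validate_schema(rows, schema):
    """Return a list of human-readable schema violations (empty when valid)."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        extra = row.keys() - schema.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        if extra:
            errors.append(f"row {i}: unexpected columns {sorted(extra)}")
        for column, expected_type in schema.items():
            if column in row and not isinstance(row[column], expected_type):
                errors.append(f"row {i}: {column} should be {expected_type.__name__}")
    return errors


class SchemaValidationTest(unittest.TestCase):
    def test_valid_rows_pass(self):
        rows = [{"region": "EU", "total_amount": 12.5}]
        self.assertEqual(validate_schema(rows, EXPECTED_SCHEMA), [])

    def test_wrong_type_is_reported(self):
        rows = [{"region": "EU", "total_amount": "12.5"}]
        self.assertEqual(
            validate_schema(rows, EXPECTED_SCHEMA),
            ["row 0: total_amount should be float"],
        )

    def test_missing_column_is_reported(self):
        rows = [{"region": "EU"}]
        self.assertEqual(
            validate_schema(rows, EXPECTED_SCHEMA),
            ["row 0: missing columns ['total_amount']"],
        )
```

Saved in a test module, these cases can be run with `python -m unittest` right after deployment, so a schema drift fails the run before downstream reports consume the data.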

- Using CI/CD Tools to Create a DevOps Pipeline

A variety of tools are now available that help create an end-to-end pipeline for provisioning infrastructure, deploying code/reports/configurations, and running automated test cases. These deployment pipelines can even be scheduled to run automatically. Examples include GitLab CI/CD and Jenkins.

Implementing DataOps: Other Automation-Related Operations

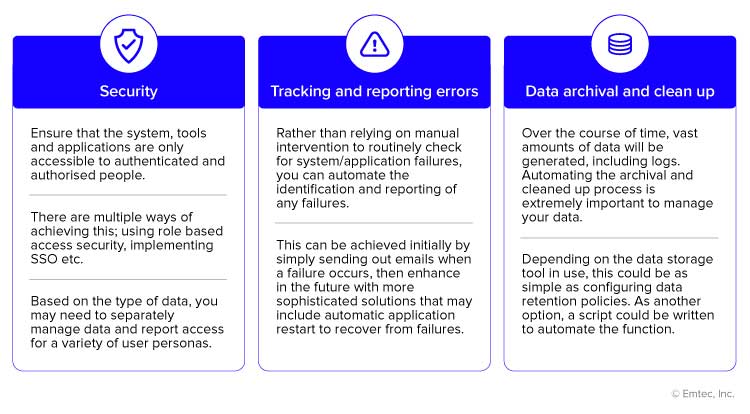

There are a few other automation-related operations that can be incorporated in the later stages of your DataOps implementation.

Conclusion

A successful DataOps implementation can provide numerous benefits:

- An agile, system-driven method that delivers superior value from organizational data while reducing project duration

- Project owners who are empowered to communicate with numerous stakeholders, systems, and solutions to enhance decision-making

- Fewer silos, so data engineers can operate at their own pace while generating insights from data sources

- Efficient pipelines that aid data, algorithm, and application management, thereby enabling automation of numerous business functions

Do you want to successfully implement DataOps and enhance your data analytics efficiency?

Contact our data experts today!